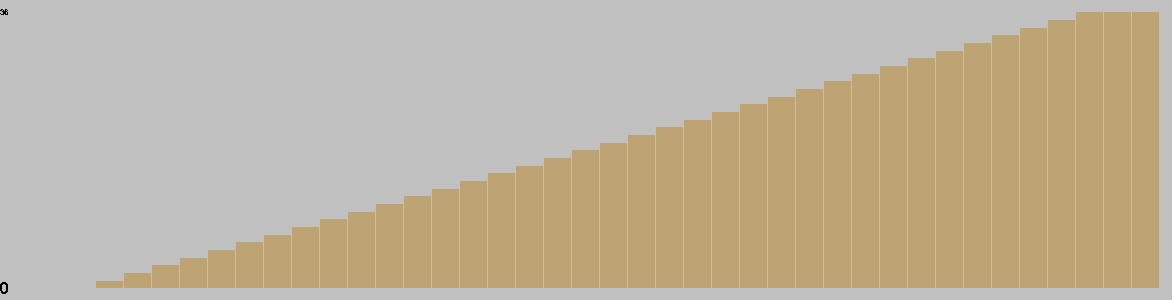

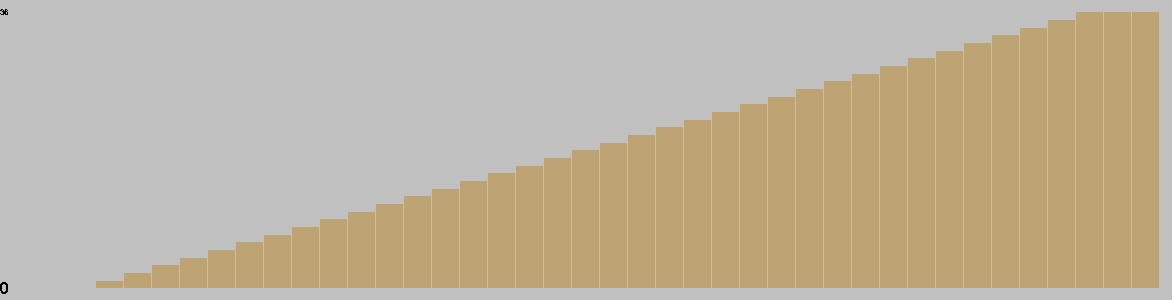

Change in Material Per Turn

This chart is based on a single playout, and gives a feel for the change in material over the course of a game.

Two players, Vertical and Horizontal, compete to claim regions of the board.

A region is a maximal set of orthogonally contiguous empty points.

A line is a set of three orthogonally contiguous empty points on the same row (a horizontal line) or on the same column (a vertical line).

A region is owned by Vertical if it contains no horizontal lines, and by Horizontal if it contains no vertical lines. A region is free if it is owned by neither player.

Vertical plays first, then turns alternate. On their turn, a player must either pass or place a stone on an empty point which is part of a free region. At all times, every region must include at least one line.
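The region, line, ownership and move rules above can be sketched in Python. This is a minimal illustration, not Linage's reference implementation: the board encoding (a list of strings, `.` for an empty point, `#` for a stone) and the names `regions`, `has_line`, `owner` and `legal_moves` are hypothetical. Passing is always allowed and is not listed as a move.

```python
from collections import deque

EMPTY, STONE = ".", "#"  # hypothetical board encoding


def regions(board):
    """Split the empty points into maximal orthogonally contiguous regions."""
    rows, cols = len(board), len(board[0])
    seen, result = set(), []
    for r in range(rows):
        for c in range(cols):
            if board[r][c] != EMPTY or (r, c) in seen:
                continue
            region, queue = set(), deque([(r, c)])
            seen.add((r, c))
            while queue:  # breadth-first flood fill
                y, x = queue.popleft()
                region.add((y, x))
                for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    ny, nx = y + dy, x + dx
                    if (0 <= ny < rows and 0 <= nx < cols
                            and board[ny][nx] == EMPTY and (ny, nx) not in seen):
                        seen.add((ny, nx))
                        queue.append((ny, nx))
            result.append(region)
    return result


def has_line(region, dy, dx):
    """True if the region holds three consecutive points along (dy, dx)."""
    return any((y + dy, x + dx) in region and (y + 2 * dy, x + 2 * dx) in region
               for y, x in region)


def owner(region):
    """'Vertical', 'Horizontal', or None for a free region."""
    horizontal = has_line(region, 0, 1)  # three in a row
    vertical = has_line(region, 1, 0)    # three in a column
    if not horizontal:
        return "Vertical"  # (a line-free region is illegal anyway)
    if not vertical:
        return "Horizontal"
    return None


def legal_moves(board):
    """Stone placements in free regions that leave a line in every region."""
    moves = []
    for region in regions(board):
        if owner(region) is not None:
            continue  # stones may only be placed in free regions
        for y, x in sorted(region):
            after = [row[:x] + STONE + row[x + 1:] if i == y else row
                     for i, row in enumerate(board)]
            if all(has_line(reg, 0, 1) or has_line(reg, 1, 0)
                   for reg in regions(after)):
                moves.append((y, x))
    return moves
```

For example, on an empty 3×3 board every point is a legal placement, while on `["...", "###", "..."]` both remaining regions hold only horizontal lines, so both belong to Horizontal and no stone can be placed.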

When both players pass in succession, the game ends. A player's score is the number of points in the regions that they own, plus a komi (see below) in the case of Horizontal. The player with the higher score wins.

The komi is the number of points which is added to Horizontal's score at the end of the game as a compensation for playing second. Before the game starts, the first player chooses the value of komi, and then the second player chooses sides. To avoid ties, it is suggested that komi be of the form n + 0.5, where n is a whole number.
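A small sketch of the end-of-game scoring, assuming the final position has already been reduced to a list of `(region size, owner)` pairs; the function name `final_scores` is hypothetical. Points in regions owned by neither player count for nobody. Because region sizes are whole numbers, a komi of the form n + 0.5 makes equal scores impossible.

```python
def final_scores(regions_with_owners, komi):
    """Return (vertical score, horizontal score, winner) for a finished game.

    regions_with_owners: iterable of (size, owner) where owner is
    "Vertical", "Horizontal", or None for an unowned region.
    """
    pairs = list(regions_with_owners)
    vertical = sum(size for size, who in pairs if who == "Vertical")
    horizontal = sum(size for size, who in pairs if who == "Horizontal") + komi
    if vertical > horizontal:
        winner = "Vertical"
    elif horizontal > vertical:
        winner = "Horizontal"
    else:
        winner = None  # only possible with a whole-number komi
    return vertical, horizontal, winner


print(final_scores([(5, "Vertical"), (4, "Horizontal"), (3, None)], komi=0.5))
```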

General comments:

Play: Combinatorial

Family: Combinatorial 2016

Mechanism(s): Territory

Components: Board

| BGG Entry | Linage |
|---|---|
| BGG Rating | 7.62 |
| #Voters | 5 |
| SD | 1.47567 |
| BGG Weight | 0 |
| #Voters | 0 |
| Year | 2016 |

| User | Rating | Comment |
|---|---|---|
| luigi87 | 8.6 | My game. Surprisingly strategic for its minimal equipment. |
| seneca29 | N/A | abstract, Go set |
| mrraow | 9 | This felt genuinely original, as well as being interesting to play; and so gets my vote for abstract game of the year (2016). |
| CarlosLuna | 8.5 | |
| Kaffedrake | 5 | Linage feels like it could be satisfyingly strategic, although it's not clear exactly what good strategy should look like. There is a kind of emergent quality in any case, where stones played project... something? shapitude? in various directions. Ladder-breakers are important I think? And you have to pay attention to... stuff. I'm not a huge fan of the komi bidding, though. There should probably be a few stones in player colours to mark finished areas. |
| fogus | 7 | |

| AI | Strong Wins | Draws | Strong Losses | #Games | Strong Win% | p1 Win% | Game Length |
|---|---|---|---|---|---|---|---|
| Random | | | | | | | |
| Grand Unified UCT(U1-T,rSel=s, secs=0.01) | 36 | 0 | 1 | 37 | 97.30 | 54.05 | 43.38 |
| Grand Unified UCT(U1-T,rSel=s, secs=0.03) | 36 | 0 | 7 | 43 | 83.72 | 41.86 | 44.44 |
| Grand Unified UCT(U1-T,rSel=s, secs=0.07) | 36 | 0 | 3 | 39 | 92.31 | 41.03 | 45.62 |
| Grand Unified UCT(U1-T,rSel=s, secs=0.20) | 36 | 0 | 9 | 45 | 80.00 | 35.56 | 47.38 |
| Grand Unified UCT(U1-T,rSel=s, secs=0.55) | 36 | 0 | 6 | 42 | 85.71 | 47.62 | 44.79 |
| Grand Unified UCT(U1-T,rSel=s, secs=1.48) | 36 | 0 | 8 | 44 | 81.82 | 52.27 | 45.32 |

Level of Play: Strong beats Weak 60% of the time (lower bound with 90% confidence).

Draw%, p1 win% and game length may give some indication of trends as AI strength increases; but be aware that the AI can introduce bias due to horizon effects, poor heuristics, etc.

| Size (bytes) | 30390 |
|---|---|
| Reference Size | 10577 |
| Ratio | 2.87 |

Ai Ai calculates the size of the implementation and compares it to the Ai Ai implementation of the simplest possible game (which just fills the board). Note that this estimate may include some graphics and heuristics code as well as the game logic. See the Wikipedia entry for more details.
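The ratio in the table is simply the implementation size divided by the reference size. A one-line check using the two byte counts reported above:

```python
implementation_bytes = 30390  # Linage implementation size from the table
reference_bytes = 10577       # simplest possible game (fills the board)

ratio = implementation_bytes / reference_bytes
print(f"{ratio:.2f}")
```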

| Playouts per second | 3776.00 (264.83µs/playout) |
|---|---|
| Reference speed | 1734906.32 (0.58µs/playout) |
| Ratio (low is good) | 459.46 |

Tavener complexity: the heat generated by playing every possible instance of a game with a perfectly efficient programme. Since this is not possible to calculate, Ai Ai calculates the number of random playouts per second and compares it to the fastest non-trivial Ai Ai game (Connect 4). This ratio gives a practical indication of how complex the game is. Combine this with the computational state space, and you can get an idea of how strong the default (MCTS-based) AI will be.
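The per-playout times and the ratio in the table follow directly from the two playout rates. A short sketch reproducing them from the reported figures (the variable names are illustrative):

```python
playouts_per_second = 3776.00                  # Linage, from the table
reference_playouts_per_second = 1734906.32     # fastest non-trivial game (Connect 4)

us_per_playout = 1e6 / playouts_per_second
reference_us_per_playout = 1e6 / reference_playouts_per_second
ratio = reference_playouts_per_second / playouts_per_second  # low is good

print(f"{us_per_playout:.2f} µs/playout")
print(f"{reference_us_per_playout:.2f} µs/playout (reference)")
print(f"ratio {ratio:.2f}")
```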

| 1: Player 1 win % | 45.85±3.07 | Includes draws = 50% |
|---|---|---|
| 2: Player 2 win % | 54.15±3.10 | Includes draws = 50% |
| Draw % | 0.30 | Percentage of games where all players draw. |
| Decisive % | 99.70 | Percentage of games with a single winner. |
| Samples | 1000 | Quantity of logged games played |
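The win percentages count each draw as half a win for each player. The sketch below uses per-player win counts that are back-calculated from the percentages above (they are not reported directly), and it does not attempt to reproduce Ai Ai's ± margins.

```python
def win_percent(wins, draws, games):
    """Win percentage with each draw counted as half a win."""
    return 100 * (wins + 0.5 * draws) / games


games, draws = 1000, 3        # 0.30% of 1000 samples were draws
p1_wins, p2_wins = 457, 540   # assumption: back-calculated, not reported

print(round(win_percent(p1_wins, draws, games), 2))
print(round(win_percent(p2_wins, draws, games), 2))
print(round(100 * (games - draws) / games, 2))  # decisive %
```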

Note: win/loss statistics may vary depending on thinking time (horizon effect, etc.), bad heuristics, bugs, and other factors, so they should be taken with a pinch of salt. (Given perfect play, any game of pure skill will always end in the same result.)

Note: Ai Ai differentiates between states where all players draw or win or lose; this is mostly to support cooperative games.

Rotation (Half turn) lost each game as expected.

Reflection (X axis) lost each game as expected.

Reflection (Y axis) lost each game as expected.

Copy last move lost each game as expected.

Mirroring strategies attempt to copy the previous move. On the first move, they attempt to play in the centre. If neither of these is possible, they pick a random move. Each entry represents a different form of copying: direct copy, reflection in either the X or Y axis, or half-turn rotation.
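The four copying rules can be sketched as coordinate transforms. This assumes a 9×9 board with columns `a`–`i` and rows `1`–`9` (consistent with moves such as `i3` and `c9` listed below), and it assumes that "reflection in the X axis" flips the row while "reflection in the Y axis" flips the column; the function names are hypothetical.

```python
SIZE = 9               # assumption: 9x9 board
COLUMNS = "abcdefghi"  # assumption: columns a-i, rows 1-9


def parse(move):
    """'c5' -> (column index, row index), both zero-based."""
    return COLUMNS.index(move[0]), int(move[1:]) - 1


def fmt(col, row):
    return f"{COLUMNS[col]}{row + 1}"


def copy_move(move):
    return move


def reflect_x(move):
    """Reflection in the X (horizontal) axis: the row flips."""
    col, row = parse(move)
    return fmt(col, SIZE - 1 - row)


def reflect_y(move):
    """Reflection in the Y (vertical) axis: the column flips."""
    col, row = parse(move)
    return fmt(SIZE - 1 - col, row)


def half_turn(move):
    """180-degree rotation about the centre point."""
    col, row = parse(move)
    return fmt(SIZE - 1 - col, SIZE - 1 - row)


CENTRE = fmt(SIZE // 2, SIZE // 2)  # first-move target for every mirror bot

print(CENTRE, half_turn("c5"), reflect_x("c2"), reflect_y("c2"))
```

A mirror bot would try the transformed move first and fall back to a random legal move when the target point is unavailable.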

| Game length | 44.99 | |
|---|---|---|
| Branching factor | 43.50 | |
| Complexity | 10^65.66 | Based on game length and branching factor |
| Computational Complexity | 10^5.91 | Saturation reached - accuracy very high. |
| Samples | 1000 | Quantity of logged games played |

| Distinct actions | 95 | Number of distinct moves (e.g. "e4") regardless of position in game tree |
|---|---|---|
| Killer moves | 1 | A 'killer' move is selected by the AI more than 50% of the time. Killers: Play Horizontal |
| Good moves | 23 | A good move is selected by the AI more often than average |
| Bad moves | 72 | A bad move is selected by the AI less often than average |
| Samples | 1000 | Quantity of logged games played |
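A sketch of the killer/good/bad classification, assuming that "selected more than 50% of the time" means selected in more than half of the logged samples and that "average" is the mean selection rate over all distinct actions. Both interpretations, the function name and the counts in the example are hypothetical.

```python
def classify_moves(selection_counts, samples):
    """Split distinct actions into killer, good and bad moves.

    selection_counts: {action: number of samples in which the AI chose it}
    """
    rates = {move: count / samples for move, count in selection_counts.items()}
    average = sum(rates.values()) / len(rates)
    killers = sorted(m for m, r in rates.items() if r > 0.5)
    good = sorted(m for m, r in rates.items() if r > average)
    bad = sorted(m for m, r in rates.items() if r < average)
    return killers, good, bad


# Hypothetical counts over 1000 samples, for illustration only.
counts = {"Play Horizontal": 700, "Play Vertical": 300, "e5": 120, "a1": 5}
print(classify_moves(counts, 1000))
```

Under this reading a killer move is always also a good move, which is consistent with the table's good and bad counts summing to the 95 distinct actions.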


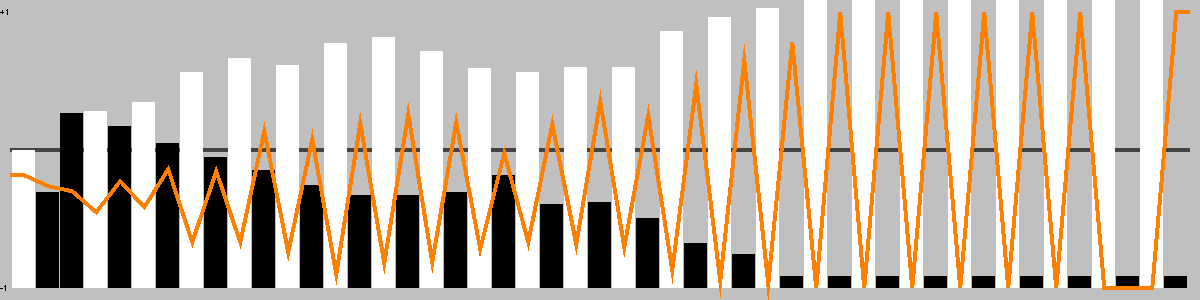

This chart shows the best move value with respect to the active player; the orange line represents the value of doing nothing (null move).

The lead changed on 10% of the game turns. Ai Ai found 2 critical turns (turns with only one good option).

Overall, this playout was 69.39% hot.

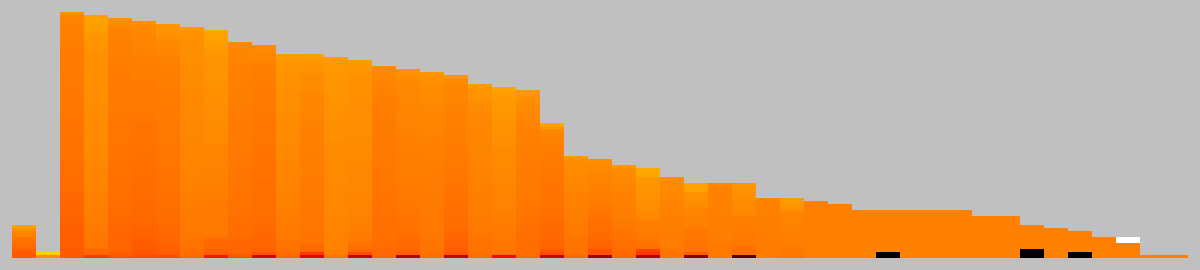

This chart shows the relative temperature of all moves each turn. Colour range: black (worst), red, orange (even), yellow, white (best).

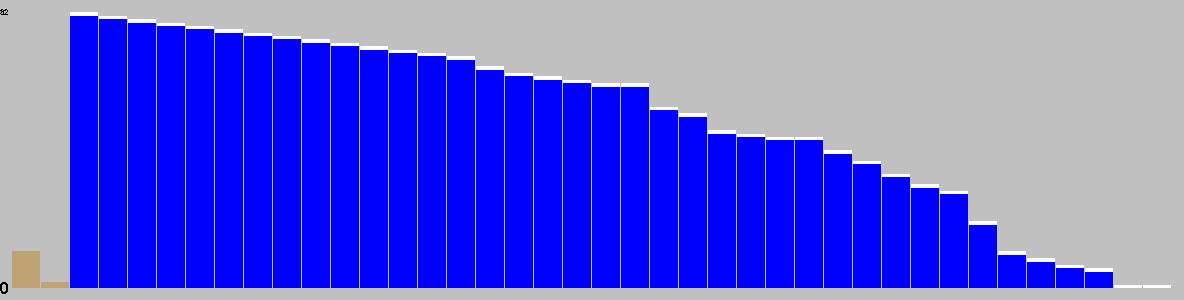

Table: branching factor per turn.

This chart is based on a single playout, and gives a feel for the types of moves available over the course of a game.

Red: removal, Black: move, Blue: Add, Grey: pass, Purple: swap sides, Brown: other.

| Depth | 0 | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|---|
| Positions reachable | 1 | 11 | 33 | 1837 | 147807 | 6115241 |

Note: most games do not take board rotation and reflection into consideration.

Multi-part turns could be treated as the same or different depth depending on the implementation.

Counts to depth N include all moves reachable at lower depths.
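Cumulative counting of this kind can be sketched as a breadth-first search that keeps a set of every position seen so far, so transpositions (the same position reached by different move orders) are counted once. The toy game here is hypothetical: three cells, X and O alternately filling an empty cell.

```python
def reachable_counts(start, successors, max_depth):
    """Number of distinct positions reachable in at most d moves, for d = 0..max_depth."""
    seen = {start}
    frontier = {start}
    counts = [1]
    for _ in range(max_depth):
        # Positions first reached at this depth; earlier ones are already counted.
        frontier = {nxt for pos in frontier for nxt in successors(pos)} - seen
        seen |= frontier
        counts.append(len(seen))
    return counts


def toy_successors(board):
    """Hypothetical toy game: players alternately fill one empty cell."""
    filled = len(board) - board.count(".")
    mark = "X" if filled % 2 == 0 else "O"
    return [board[:i] + mark + board[i + 1:] for i, c in enumerate(board) if c == "."]


print(reachable_counts("...", toy_successors, 3))
```

At depth 3 there are six move sequences but only three distinct boards, which is why each count includes lower depths and deduplicates positions.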

Inaccuracies may also exist due to hash collisions, but Ai Ai uses 64-bit hashes so these will be a very small fraction of a percentage point.
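The claim about 64-bit hashes can be checked with the birthday bound: for n positions hashed uniformly at random into 2^64 values, the expected number of colliding pairs is about n(n−1)/2 / 2^64. Using the depth-5 count from the table above, and assuming the hash values behave like uniform random numbers:

```python
positions = 6_115_241   # positions reachable to depth 5, from the table
hash_space = 2 ** 64    # 64-bit hashes

pairs = positions * (positions - 1) / 2
expected_collisions = pairs / hash_space  # birthday-bound estimate
print(f"{expected_collisions:.2e}")
```

The result is on the order of one millionth of a collision, so hash collisions are very unlikely to affect these counts.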

22 solutions found at depth 4.

| Moves |
|---|
| Komi=0.5,Play Vertical,c5,i3 |
| Komi=0.5,Play Vertical,c5 |
| Komi=1.5,Play Vertical,g5 |
| Komi=2.5,Play Horizontal,c9 |
| Komi=3.5,Play Horizontal,d1 |
| Komi=3.5,Play Horizontal,c2 |
| Komi=3.5,Play Horizontal,g9 |
| Komi=4.5,Play Vertical,b2 |
| Komi=4.5,Play Horizontal,f2 |
| Komi=6.5,Play Vertical,i2 |
| Komi=9.5,Play Vertical,e5 |
| Komi=8.5,Play Vertical,h1 |

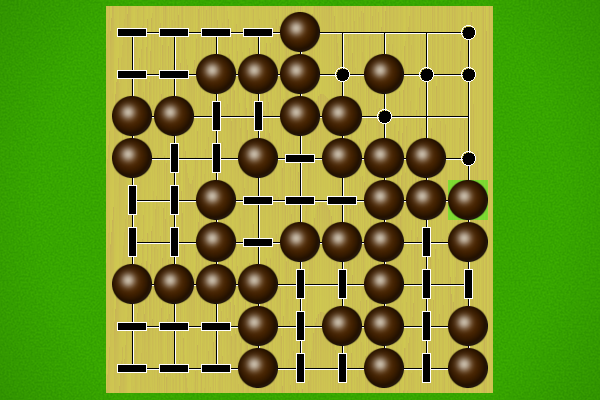

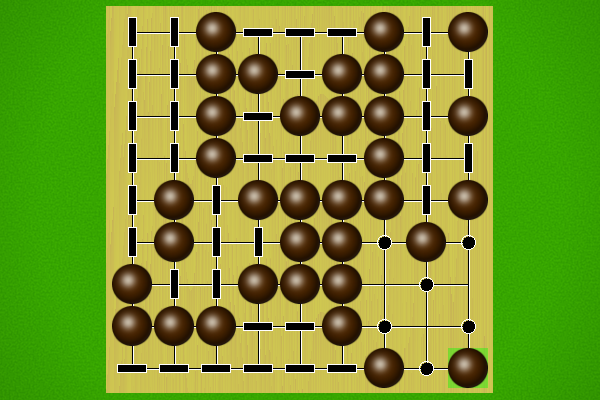

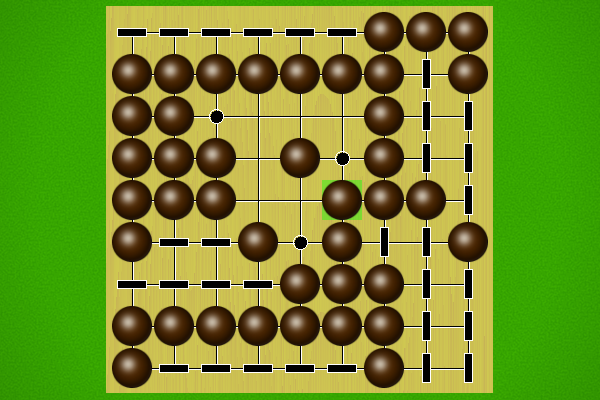

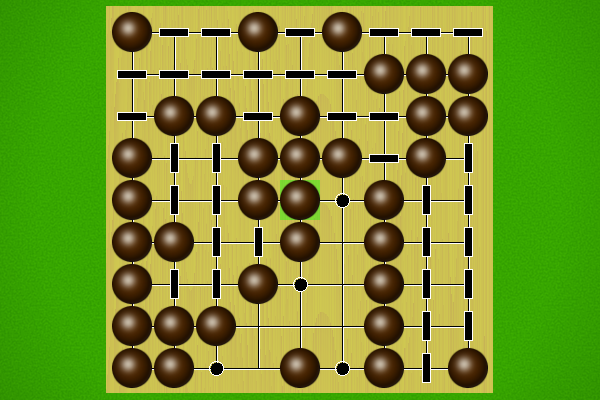

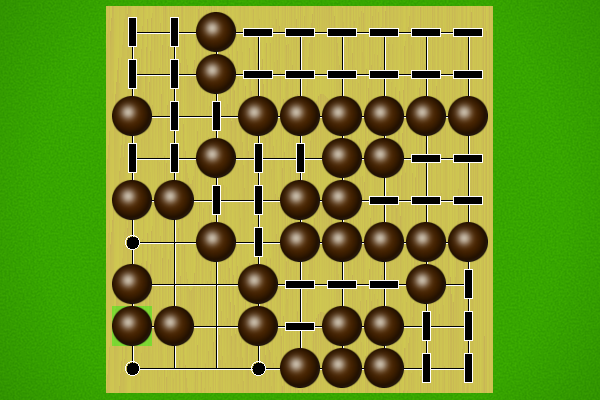

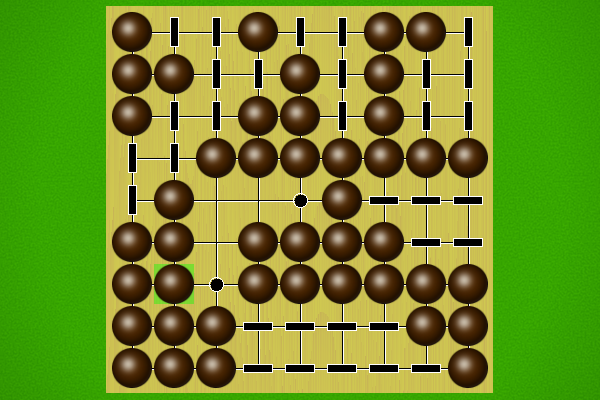

| Puzzle | Solution |
|---|---|
| Vertical (p1) to win in 9 moves | |
| Vertical (p1) to win in 8 moves | |
| Horizontal (p2) to win in 7 moves | |
| Vertical (p1) to win in 7 moves | |
| Horizontal (p2) to win in 7 moves | |
| Vertical (p1) to win in 3 moves | |

Selection criteria: the first move must be unique, and not forced to avoid losing. Beyond that, puzzles are rated by the product of [total moves]/[best moves] at each step, and the best puzzles are selected.
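A sketch of the rating rule, where each step of a candidate solution is described by a hypothetical `(total_moves, best_moves)` pair. The "not forced to avoid losing" criterion needs a game-tree search and is not modelled here.

```python
from math import prod


def first_move_unique(steps):
    """The first step must have exactly one best move."""
    return steps[0][1] == 1


def puzzle_rating(steps):
    """Product of total_moves / best_moves over every step of the solution."""
    return prod(total / best for total, best in steps)


# Hypothetical puzzle: 40 moves with 1 best, then 30 with 2 best, then 20 with 4 best.
steps = [(40, 1), (30, 2), (20, 4)]
print(first_move_unique(steps), puzzle_rating(steps))
```

Puzzles where the best moves are a small fraction of the available moves at every step score highest.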